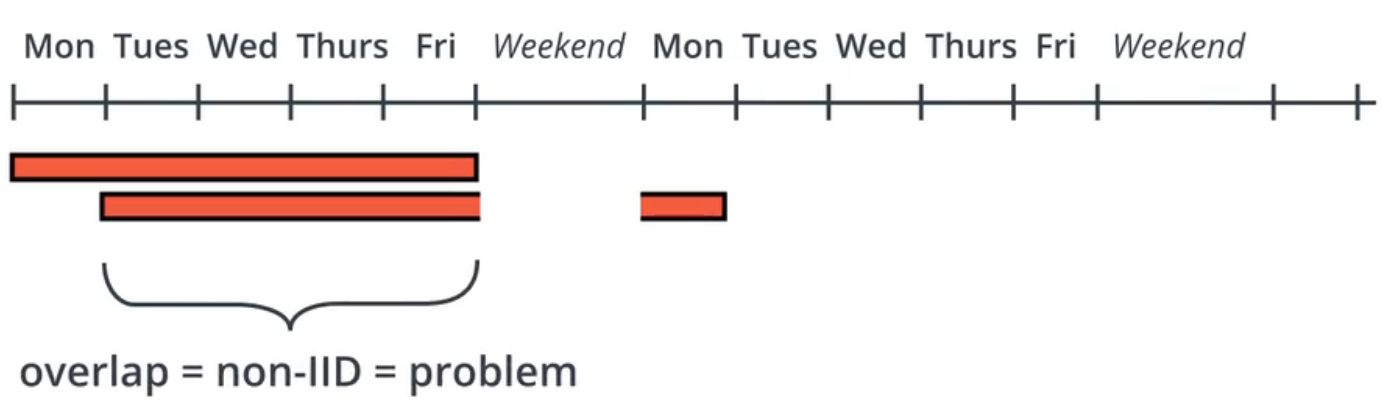

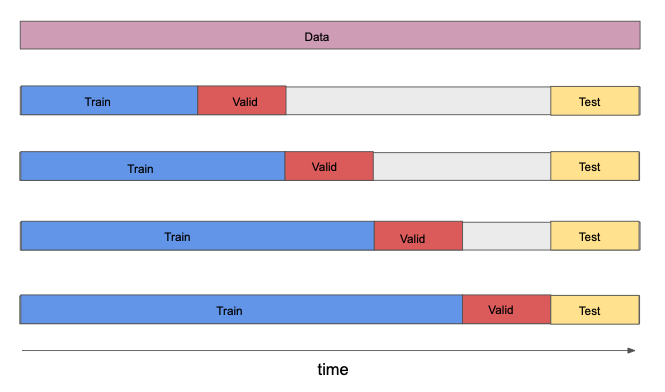

Use cross-validation to choose the right amount of model complexity: enough to capture real structure, but not so much that the model fits noise.
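As a minimal sketch of this idea, the snippet below uses k-fold cross-validation to pick the number of neighbours in a toy nearest-neighbour regression, where a smaller `k` means a more complex model. The data, the candidate values, and the helper names `knn_predict` and `cv_error` are illustrative assumptions, not part of the course material. The toy data are i.i.d., so shuffled folds are acceptable here; for financial time series the folds would need to respect time order.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the targets of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def cv_error(data, k, n_folds=5):
    """Mean squared error of k-NN, averaged over n_folds held-out folds."""
    total, count = 0.0, 0
    for fold in range(n_folds):
        held_out = data[fold::n_folds]  # every n_folds-th point
        train = [p for i, p in enumerate(data) if i % n_folds != fold]
        for x, y in held_out:
            total += (knn_predict(train, x, k) - y) ** 2
            count += 1
    return total / count

# Hypothetical toy data: a sine curve plus Gaussian noise.
random.seed(0)
data = [(i / 10, math.sin(i / 10) + random.gauss(0, 0.3)) for i in range(100)]
random.shuffle(data)

# Small k = flexible model (risk of overfitting); large k = rigid model.
candidates = [1, 3, 5, 9, 15, 25, 45]
errors = {k: cv_error(data, k) for k in candidates}
best_k = min(errors, key=errors.get)
print("cross-validated error by k:", errors)
print("selected k:", best_k)
```

The selected `k` is the one with the lowest held-out error, not the lowest training error, which is what keeps the complexity choice honest.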

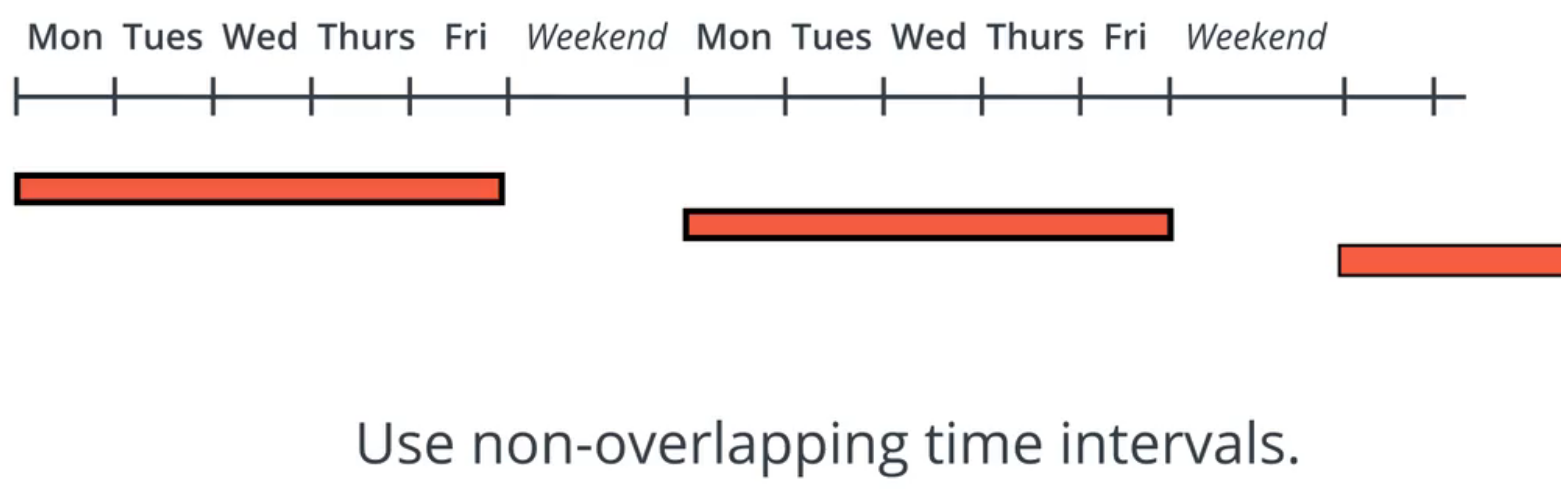

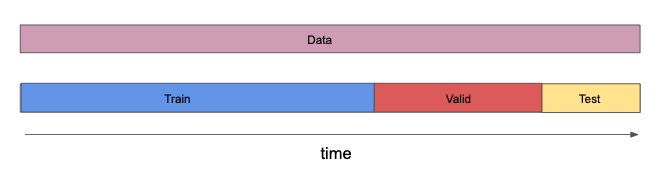

Always keep an out-of-sample test dataset. Look at results on this dataset only after all model decisions have been made. If you let those results influence decisions about the model, you no longer have an estimate of generalization error.

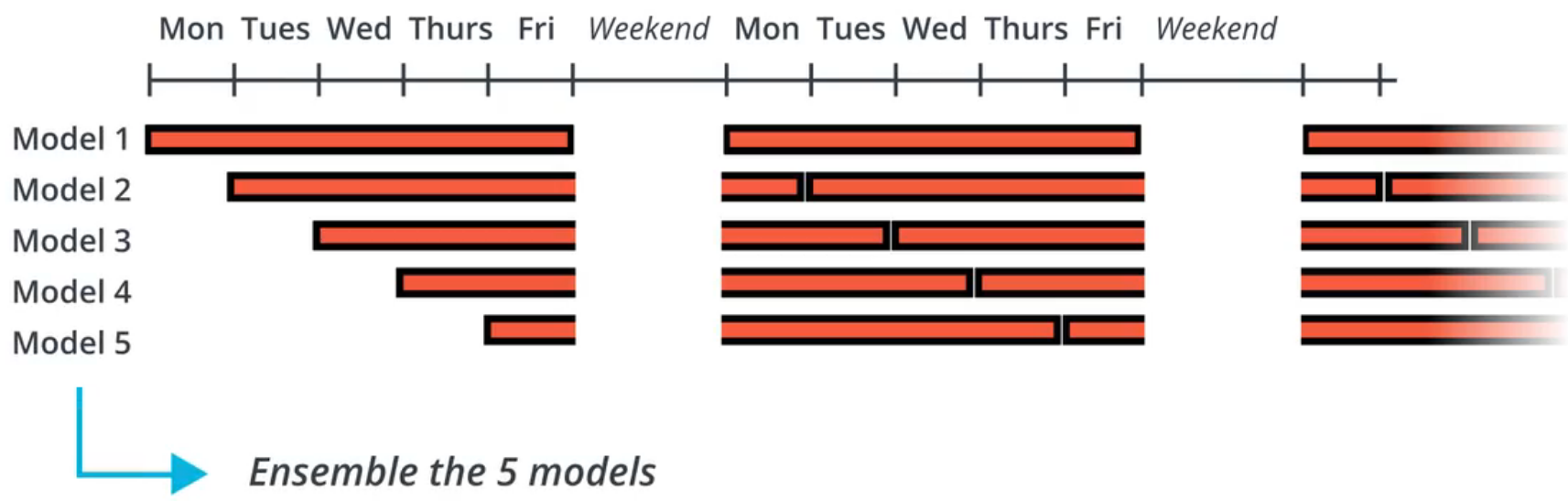

Be wary of creating multiple model configurations. If a backtest reports a Sharpe ratio of 2, but it is the best of 10 model configurations, the result suffers from a kind of multiple comparison bias: the more configurations you try, the more likely it is that one of them looks good by chance. This is a different problem from repeatedly tweaking the parameters of a single model until it reaches a Sharpe ratio of 2.
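The bias can be illustrated with a small simulation. The sketch below assumes strategies with no real edge (zero-mean Gaussian daily returns, one year of data, risk-free rate of zero) and compares the average Sharpe ratio of a single configuration with the average of the best Sharpe ratio among 10. All parameters and the function names `sharpe` and `best_of_n_sharpe` are assumptions chosen for illustration.

```python
import random

def sharpe(returns, periods_per_year=252):
    """Annualized Sharpe ratio, taking the risk-free rate as zero."""
    n = len(returns)
    mean = sum(returns) / n
    var = sum((r - mean) ** 2 for r in returns) / (n - 1)
    return mean / var ** 0.5 * periods_per_year ** 0.5

def best_of_n_sharpe(n_configs, n_days, rng):
    """Best backtest Sharpe among n_configs strategies with no real edge."""
    return max(
        sharpe([rng.gauss(0.0, 0.01) for _ in range(n_days)])
        for _ in range(n_configs)
    )

rng = random.Random(42)
trials = 200
single = sum(best_of_n_sharpe(1, 252, rng) for _ in range(trials)) / trials
best10 = sum(best_of_n_sharpe(10, 252, rng) for _ in range(trials)) / trials
print(f"mean Sharpe, 1 configuration:        {single:.2f}")
print(f"mean best Sharpe, 10 configurations: {best10:.2f}")
```

Every simulated strategy has a true Sharpe ratio of zero, yet the best of 10 will typically show a clearly positive value, which is why the number of configurations tried should be reported alongside the headline result.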

Be careful about your choice of time period for validation and testing. Make sure that the test period is not special in any way.

Be careful about how often you touch the data. Use the test data only once, when your validation process is finished and your model is fully built. Too many tweaks in response to tests on validation data are likely to cause the model to increasingly fit the validation data.
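One way to enforce the "use it once" rule in code is to wrap the test data in an object that refuses a second evaluation. The `HoldOutSet` class below is a hypothetical helper written for this note, not something from the course or from any library.

```python
class HoldOutSet:
    """Wraps test data so that it can be scored exactly once."""

    def __init__(self, rows):
        self._rows = list(rows)
        self._used = False

    def evaluate(self, score_fn):
        """Apply score_fn to the test data; fail on any later attempt."""
        if self._used:
            raise RuntimeError(
                "Test set already used: further results would no longer "
                "estimate generalization error."
            )
        self._used = True
        return score_fn(self._rows)

# Hypothetical daily returns of the final model on the test period.
holdout = HoldOutSet([0.01, -0.02, 0.015])
final_score = holdout.evaluate(lambda rows: sum(rows) / len(rows))
print("mean test return:", final_score)
```

A guard like this does not stop someone from reloading the raw data, but it makes an accidental second look an explicit error rather than a silent habit.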

Keep track of the dates on which modifications to the model were made, so that you know the date on which a provable out-of-sample period began. If a model hasn’t changed for 3 years, then its performance over the past 3 years is a measure of out-of-sample performance.
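A minimal sketch of this bookkeeping, assuming the change log is simply a list of dates: the out-of-sample period starts at the most recent modification. The dates and function names below are made up for illustration.

```python
from datetime import date

def out_of_sample_start(modification_dates):
    """The provable out-of-sample period begins at the last model change."""
    return max(modification_dates)

def out_of_sample_years(modification_dates, today):
    """Length of the out-of-sample period in years (approximate)."""
    return (today - out_of_sample_start(modification_dates)).days / 365.25

# Hypothetical change log for one model.
change_log = [date(2019, 3, 1), date(2020, 11, 15), date(2021, 6, 30)]
start = out_of_sample_start(change_log)
years = out_of_sample_years(change_log, today=date(2024, 6, 30))
print(f"out-of-sample since {start}, about {years:.1f} years")
```

Only performance after `start` counts as out-of-sample; anything earlier was available when the model was last changed.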

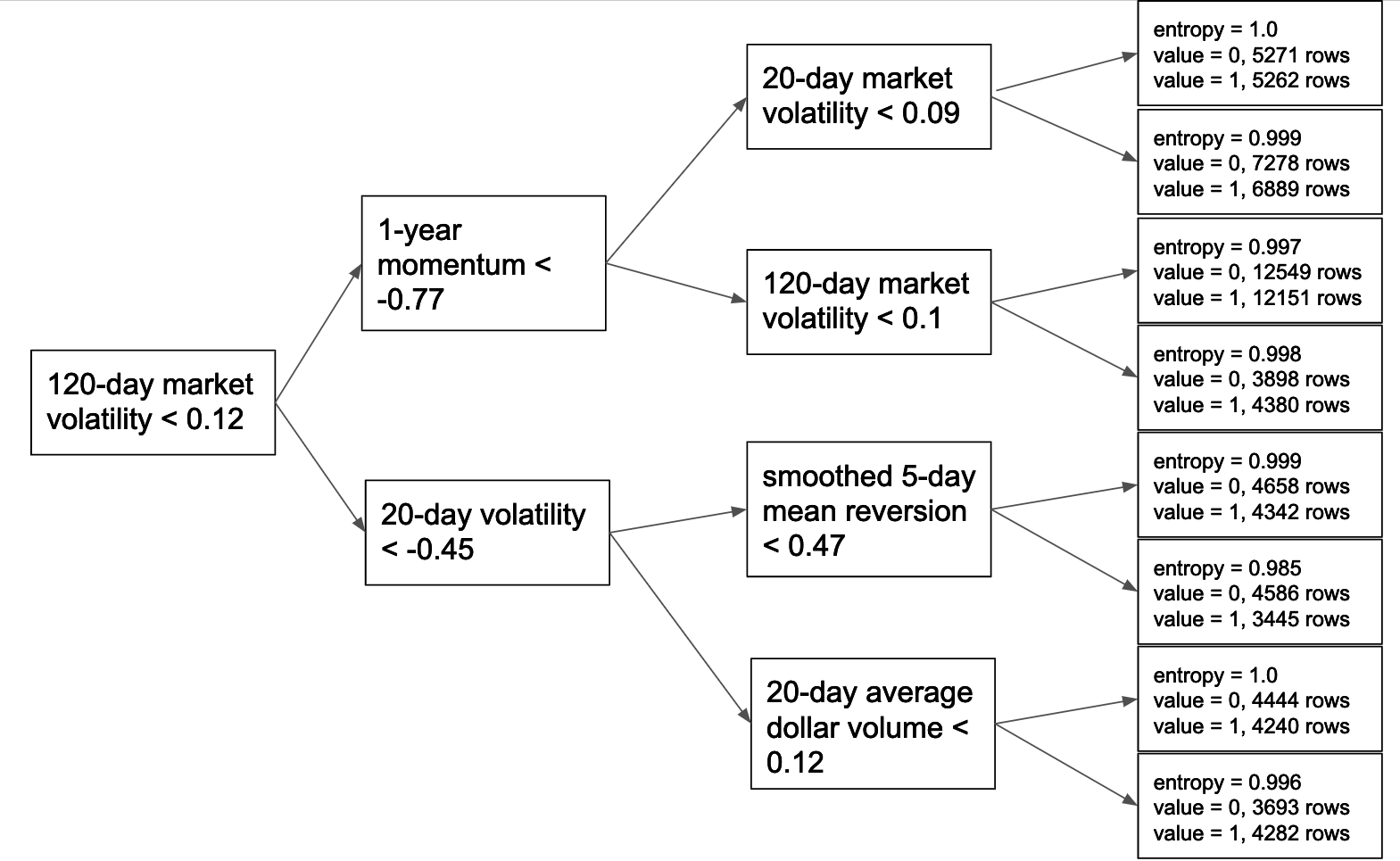

Traditional ML is often about fitting a model until it works. Finance is different: you can’t keep adjusting parameters until you get the result you want. Maximizing the in-sample Sharpe ratio is not a good goal, because it will probably make the out-of-sample Sharpe ratio worse. It’s very important to follow good research practices.

How does one split data into training, validation, and test sets so as to avoid bias induced by structural changes? It’s not always better to use the most recent time period as the test set. Sometimes it’s better to hold out a random sample of years from the middle of your dataset. You want there to be nothing special about the hold-out set. If the test set were the quant meltdown or the financial crisis, it would be a special hold-out set. Testing on those periods would leave you with an unanswerable question: was it just bad luck? There is still some value in a strategy that works every year except during a financial crisis.
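The random-years idea could be sketched as follows. The function `split_years` and its `special_years` parameter are hypothetical: keeping years such as a crisis out of the validation and test sets is one possible reading of "nothing special about the hold-out set", not a rule stated in the course.

```python
import random

def split_years(years, n_valid, n_test, seed=0, special_years=()):
    """Randomly pick validation and test years, skipping 'special' years.

    Years listed in special_years are never held out; they stay in the
    training set (an assumption made for this sketch).
    """
    rng = random.Random(seed)
    eligible = [y for y in years if y not in special_years]
    held_out = rng.sample(eligible, n_valid + n_test)
    valid = sorted(held_out[:n_valid])
    test = sorted(held_out[n_valid:])
    train = sorted(y for y in years if y not in held_out)
    return train, valid, test

years = list(range(2000, 2020))
train, valid, test = split_years(
    years, n_valid=3, n_test=3, special_years={2007, 2008}
)
print("train:", train)
print("valid:", valid)
print("test: ", test)
```

Fixing the seed keeps the split reproducible, so the same years stay held out across experiments.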

Alphas tend to decay over time, so one can argue that using the past 3 or 4 years as a hold-out set makes for a tough test. Many signals work less and less well over time because knowledge spreads and new data are disseminated more broadly, and this broader dissemination causes alpha decay. A strategy that performed well on a hold-out set from the past few years would therefore be slightly more impressive than one tested on a less recent period.

Source: AI for Trading, Udacity